This project won the 2017 SONOS design challenge. My partner Ali Decker, an Engineering Psychology student, and I designed and developed BONKER, a native app that uses computer vision and simple machine learning to instantly curate a playlist from the photos you snap.

Accessing a personalized music experience

When we asked friends about their experiences with smart speakers and music apps, a recurring pain point was the time and effort it takes to choose or build the right playlist; depending on the context, this could even be stressful. We saw choosing the right mix as a barrier to enjoying music and to interacting with music apps.

With this in mind, we set out to design an experience that instantly chooses the right music for the mood, relieving the user's stress and allowing a more enjoyable, but still highly personal, experience. We had three aims: to free users from the Paradox of Choice (the tendency for anxiety to increase as the number of options increases), to minimize the time and taps it takes to get to music, and to aid decision-making.

Design process

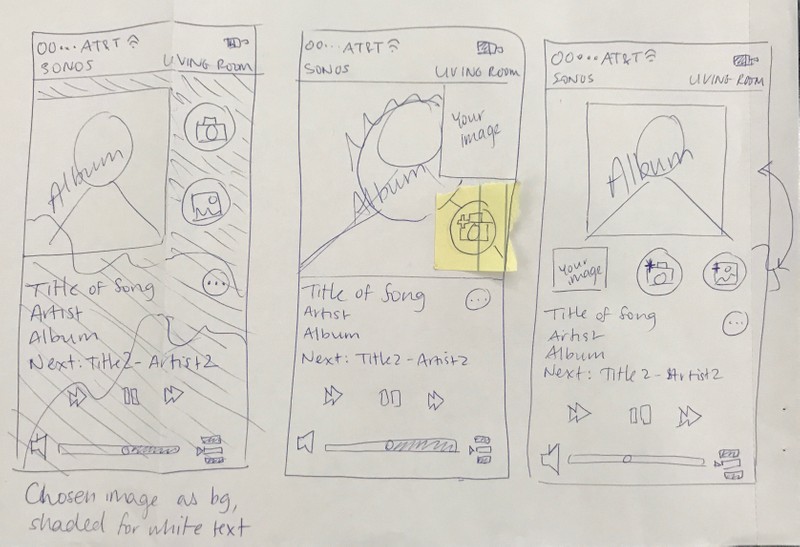

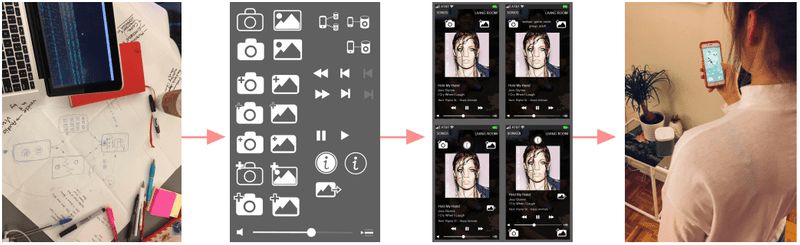

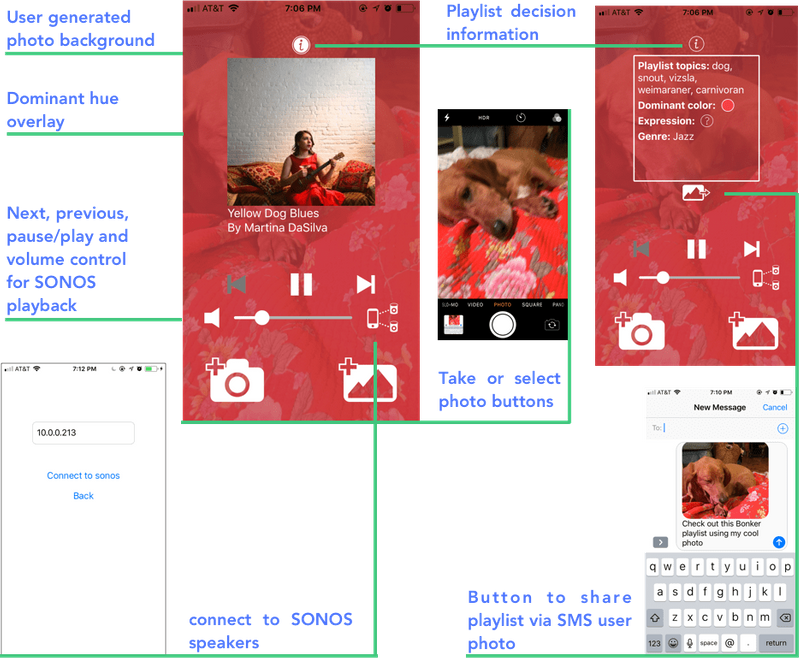

We started with paper wireframes, which became mockups in Illustrator. We then ran informal A/B tests to determine which icon designs fit users' mental models of each intended function and to ensure that the primary buttons were the most salient and reachable. Throughout the two-week project, we also ran informal experience tests that drove each iteration and even led to new features.

Design considerations

Human-in-the-loop:

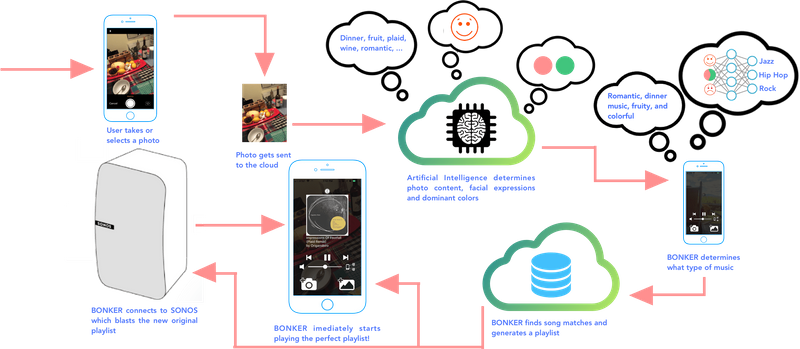

Today's music apps use artificial intelligence to generate tailored playlists, but the user has no active input. We wanted to engineer a system that places the human in the loop by having them supply the initial input: a snapped photo. We designed this relationship to build trust between the user and the algorithm and to foster a sense of collaboration in creating the playlist.

Explainable AI:

We didn't want the decision-making process behind music choices to be hidden from users in a black box. Instead, we designed the UI to make the algorithm's decision-making transparent and observable.

- We designed an "i" button that toggles a view of the keywords, facial expressions, and color details detected in the photo, showing the user how they informed BONKER's music choice.

- We created a background overlay that reflects the dominant color in the photo, which strengthened the perceived link between the photo's colors and the music's “mood.” We also placed the user's photo behind this overlay to reinforce the connection between the photo's contents and each track.
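To make "dominant color" concrete for an overlay like this, here is a minimal sketch that swaps in a plain color-quantization histogram rather than the model-based image-properties approach the app actually used. It assumes the photo has already been decoded into a list of `(r, g, b)` pixel tuples; the function names `dominant_color` and `overlay_hex`, the `levels` parameter, and the toy pixel data are all hypothetical.

```python
from collections import Counter


def dominant_color(pixels, levels=4):
    """Average RGB of the most populated bin in a coarse color histogram.

    pixels: iterable of (r, g, b) tuples, channels in 0..255.
    levels: bins per channel (hypothetical knob), so levels**3 bins in total.
    """
    counts = Counter()
    sums = {}
    for r, g, b in pixels:
        # Quantize each channel to 0..levels-1 so similar shades share a bin.
        key = (r * levels // 256, g * levels // 256, b * levels // 256)
        counts[key] += 1
        sr, sg, sb = sums.get(key, (0, 0, 0))
        sums[key] = (sr + r, sg + g, sb + b)
    if not counts:
        raise ValueError("image has no pixels")
    key, n = counts.most_common(1)[0]
    # Report the mean of the winning bin rather than its corner, so the
    # overlay matches the photo's actual shade.
    return tuple(round(total / n) for total in sums[key])


def overlay_hex(rgb):
    """Format an (r, g, b) tuple as a hex color string for a UI overlay."""
    return "#{:02x}{:02x}{:02x}".format(*rgb)


# Toy "photo" (hypothetical): mostly orange pixels with some blue.
photo = [(250, 120, 30)] * 6 + [(242, 112, 22)] * 2 + [(20, 40, 200)] * 3
print(overlay_hex(dominant_color(photo)))  # → #f8761c
```

Binning before counting matters because real photos rarely repeat an exact RGB value; grouping near-identical shades lets the largest region of similar color win, which is what a viewer perceives as the photo's dominant color.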

Development

I built the app with React Native, a JavaScript framework for building native apps for both iOS and Android, and used Expo's React Native developer tools to build, test, and deploy prototype iterations. The AI is handled by several TensorFlow convolutional neural network models that I deployed on Google Cloud's ML Engine: object detection, facial expression recognition, and image properties such as dominant color. Using a dataset of self-reported visual-auditory congruences, I then built a music-picking decision tree with the Iterative Dichotomiser 3 (ID3) algorithm. Once a photo is taken and classified, the app searches for and fetches matching tracks through SoundCloud's API. Finally, the generated playlist is streamed over Wi-Fi through the SONOS Control API, which gives the BONKER UI playback control.
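ID3 grows a decision tree greedily: at each node it splits on the feature with the highest information gain, that is, the largest drop in label entropy $H(S) = -\sum_c p_c \log_2 p_c$, then recurses on each branch until a node is pure or no features remain. The sketch below illustrates that technique in plain Python. It is not BONKER's code: the feature names (`color`, `expression`, `scene`), the mood labels, and the rows are hypothetical stand-ins for the self-reported congruence data, and falling back to a node's majority label for unseen values is an added convenience, not part of ID3 itself.

```python
from collections import Counter
from math import log2

# Hypothetical toy rows, NOT the real self-reported congruence dataset:
# each row pairs photo features with the music mood a listener chose.
ROWS = [
    ({"color": "warm", "expression": "happy", "scene": "outdoor"}, "upbeat"),
    ({"color": "warm", "expression": "neutral", "scene": "outdoor"}, "upbeat"),
    ({"color": "cool", "expression": "happy", "scene": "indoor"}, "chill"),
    ({"color": "cool", "expression": "neutral", "scene": "indoor"}, "chill"),
    ({"color": "dark", "expression": "sad", "scene": "indoor"}, "mellow"),
    ({"color": "dark", "expression": "neutral", "scene": "outdoor"}, "mellow"),
    ({"color": "warm", "expression": "sad", "scene": "indoor"}, "mellow"),
]


def entropy(labels):
    """Shannon entropy, in bits, of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * log2(n / total) for n in Counter(labels).values())


def information_gain(rows, feature):
    """Drop in label entropy from splitting rows on one categorical feature."""
    labels = [label for _, label in rows]
    remainder = 0.0
    for value in sorted({x[feature] for x, _ in rows}):
        subset = [label for x, label in rows if x[feature] == value]
        remainder += len(subset) / len(rows) * entropy(subset)
    return entropy(labels) - remainder


def id3(rows, features):
    """Grow a tree. Leaves are labels; internal nodes are
    (feature, {value: subtree}, majority_label)."""
    labels = [label for _, label in rows]
    majority = Counter(labels).most_common(1)[0][0]
    # Stop when the node is pure or there is nothing left to split on.
    if len(set(labels)) == 1 or not features:
        return majority
    best = max(features, key=lambda f: information_gain(rows, f))
    remaining = [f for f in features if f != best]
    branches = {
        value: id3([(x, y) for x, y in rows if x[best] == value], remaining)
        for value in sorted({x[best] for x, _ in rows})
    }
    return (best, branches, majority)


def predict(tree, example):
    """Follow branches to a leaf; unseen values fall back to the node's majority label."""
    while isinstance(tree, tuple):
        feature, branches, majority = tree
        tree = branches.get(example.get(feature), majority)
    return tree


tree = id3(ROWS, ["color", "expression", "scene"])
print(predict(tree, {"color": "cool", "expression": "happy", "scene": "outdoor"}))  # → chill
```

On this toy data `color` separates the moods best, so it becomes the root; only the `warm` branch needs a second split. In an app like the one described, the classifier outputs for a new photo would be mapped to these categorical features before calling `predict`, and the resulting mood would drive the track search.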

Try it out with your own photos: Expo Client

Because the SONOS Control API is not public, the source code is private. If you have questions or would like to contribute, feel free to contact me.